Lectures

Important notes:

- We will upload lectures prior to their corresponding classes.

- [SIT770]: Indicates that the content provided is specifically tailored for students currently enrolled in SIT770.

Week 0: Course Overview

Summary: Introduction and course overview.

[slides] [slides 6up]

Video recordings (23 Minutes and 19 Seconds):

Week 1: Information Retrieval Part 1

Summary: Inverted indices, scoring, term weighting, and the vector space model.

[slides] [slides 6up]

Video recordings (1 Hour, 59 Minutes and 23 Seconds):

- Introduction to Information Retrieval (6:32)

- Term-document incidence matrices (7:10)

- The Inverted Index, the key data structure underlying modern IR (9:52)

- Query processing with an inverted index (5:23)

- Structured vs. Unstructured Data (3:31)

- Modeling in Information Retrieval (4:37)

- The Boolean Model (10:28)

- Phrase queries and positional indexes (10:02)

- The Vector Model (1:28)

- Ranked retrieval (6:08)

- Scoring documents (7:41)

- Term frequency (9:29)

- Collection Statistics (12:39)

- Weighting schemes (4:25)

- Vector space scoring (19:59)

Week 2: Information Retrieval Part 2

Summary: Probabilistic IR and Evaluation methods.

[slides] [slides 6up]

Video recordings (1 Hour, 59 Minutes and 22 Seconds):

- Probabilistic IR model (1 Hour, 08 Minutes and 39 Seconds)

- IR Evaluation methods (50 Minutes and 43 Seconds)

Week 3: Text processing

Summary: Regular Expressions, Text Normalization, Edit Distance.

[slides] [slides 6up]

Video recordings (1 Hour, 54 Minutes and 32 Seconds):

- Regular Expressions (28 Minutes and 32 Seconds)

- Text Normalization (37 Minutes and 11 Seconds)

- [SIT770] Edit Distance (48 Minutes and 49 Seconds)

Week 4: N-gram Language Models

Summary: N-gram Language Models.

[slides] [slides 6up]

Video recordings (2 Hours, 01 Minutes and 23 Seconds):

- Language Models (1 Hour, 14 Minutes and 31 Seconds)

- Spelling Correction and the Noisy Channel (46 Minutes and 52 Seconds)

Week 5: Naïve Bayes and Sentiment Classification

Summary: Naïve Bayes and Sentiment Classification.

[slides] [slides 6up]

Video recordings (2 Hours, 01 Minutes and 05 Seconds):

- Naïve Bayes and Sentiment Classification (1 Hour, 20 Minutes and 51 Seconds):

  - The Task of Text Classification (9:59)

  - The Text Classification Problem (5:53)

  - The Naive Bayes Classifier (15:14)

  - Naive Bayes: Learning (10:26)

  - Sentiment and Binary Naive Bayes (11:13)

  - More on Sentiment Classification (10:09)

  - Naïve Bayes: Relationship to Language Modeling (5:08)

  - Text Classification: Practical Issues (8:05)

  - Avoiding Harms in Classification (4:44)

- Evaluation and Testing Techniques for Sentiment Analysis and Text Classification (40 Minutes and 14 Seconds)

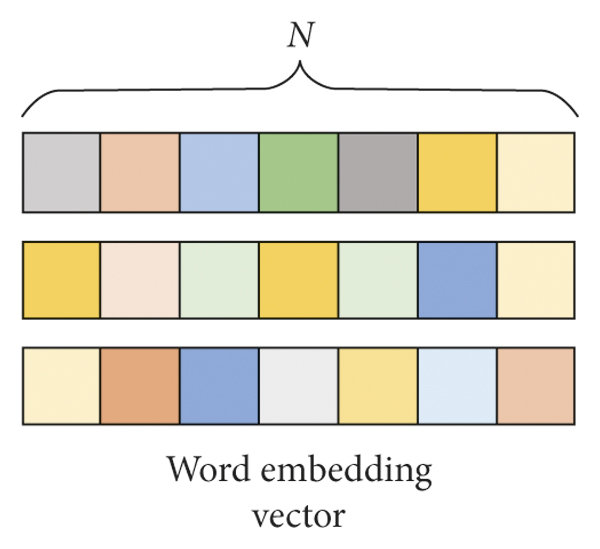

Week 6: Vector Embeddings

Summary: Vector Embeddings.

[slides] [slides 6up]

Video recordings (1 Hour, 12 Minutes and 07 Seconds):

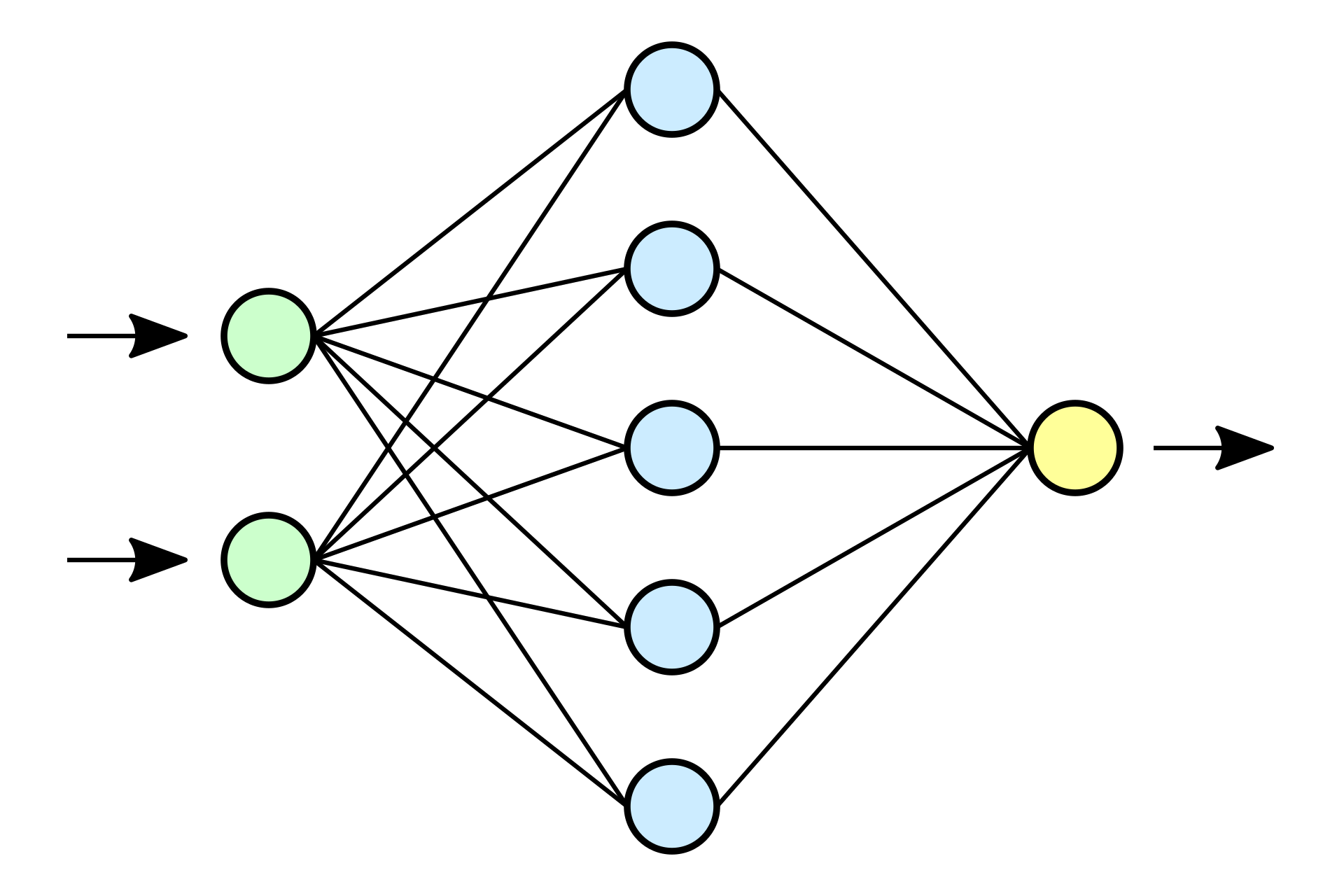

Week 7: Neural Networks and Neural LMs

Summary: Neural Networks and Neural LMs.

[slides] [slides 6up] [Notes]

Video recordings (1 Hour, 29 Minutes and 39 Seconds):

- Introduction (1:01)

- Introduction to Neural Nets (1 Hour, 13 Minutes and 06 Seconds):

  - Neural Networks Overview (4:26)

  - Neural Network Representation (5:14)

  - Computing a Neural Network’s Output (9:57)

  - Vectorizing across multiple examples (9:05)

  - Explanation for Vectorized Implementation (7:37)

  - Activation functions (10:56)

  - Derivatives of activation functions (7:57)

  - Gradient descent for Neural Networks (9:57)

  - Random Initialization (7:57)

- Applying feedforward networks to NLP tasks (15:32)

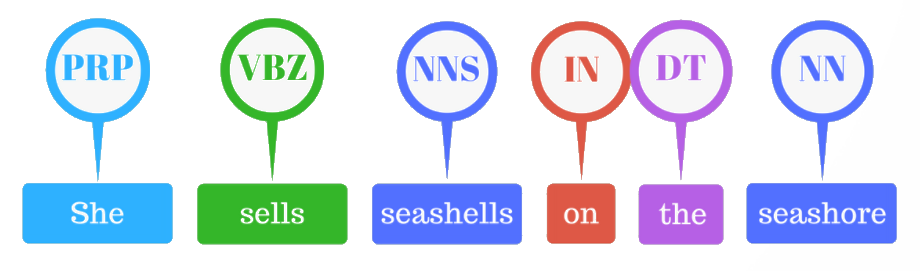

Week 8: Sequence Labeling

Summary: Sequence Labeling.

[slides] [slides 6up]

Video recordings (1 Hour, 27 Minutes and 01 Seconds):

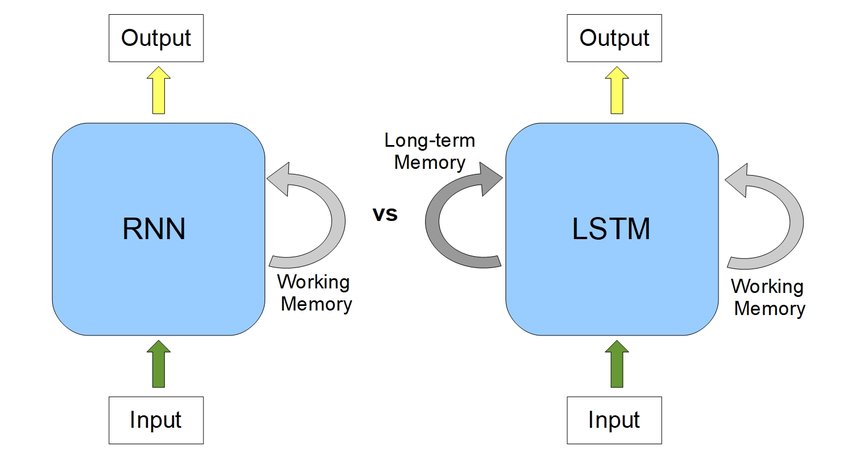

Week 9: RNNs and LSTMs

Summary: RNNs and LSTMs.

[slides] [slides 6up] [Notes]

Video recordings (2 Hours, 34 Minutes and 11 Seconds):

- Why sequence models? (3:00)

- Notation (9:15)

- Recurrent Neural Network Model (16:31)

- Different types of RNNs (8:33)

- Language model and sequence generation (12:01)

- Sampling novel sequences (8:38)

- Vanishing gradients with RNNs (6:28)

- Gated Recurrent Unit (GRU) (17:06)

- Long Short Term Memory (LSTM) (9:53)

- Bidirectional RNN (8:19)

- Deep RNNs (5:16)

- Basic Models (6:18)

- Picking the most likely sentence (8:56)

- Beam Search (11:54)

- Attention Model Intuition (9:41)

- Attention Model (12:22)

Week 10: Transformers and Pretrained LMs

Summary: Transformers and Pretrained LMs.

[slides] [slides 6up]

Video recordings (2 Hours, 05 Minutes and 14 Seconds):

- Transformers: Attention Is All You Need! (1 Hour, 10 Minutes and 29 Seconds)

- Pre-trained LMs (54 Minutes and 45 Seconds)